Throughput Testing – VMs between Hypervisors

My testing of Virtual Machine to Virtual Machine throughput demonstrated that with minimal tuning, considerable throughput can be obtained between virtual machines running on the same Hypervisor.

Clearly, the next step was to test throughput between Hypervisors. I had planned to run some initial tests and then, based on the results, go “all out” and configure SR-IOV as needed to get as close to wire speed as possible, but the initial testing produced some great results.

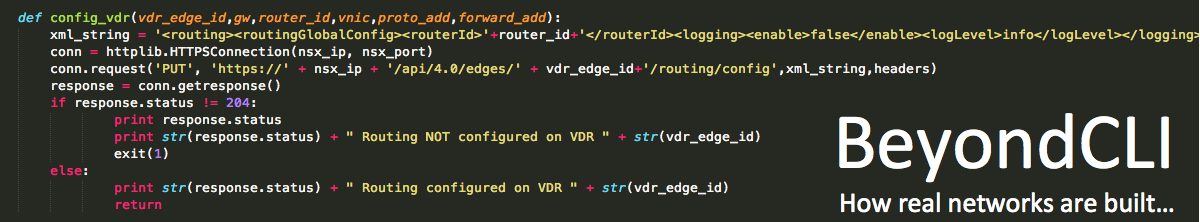

First off, given the results from the previous round of tests, I wanted to be sure I started with a configuration I felt would work well. I led with the following Windows OS and NTttcp configuration:

2012 R2 Adapter Receive Side Scaling Enabled – 4 Processes

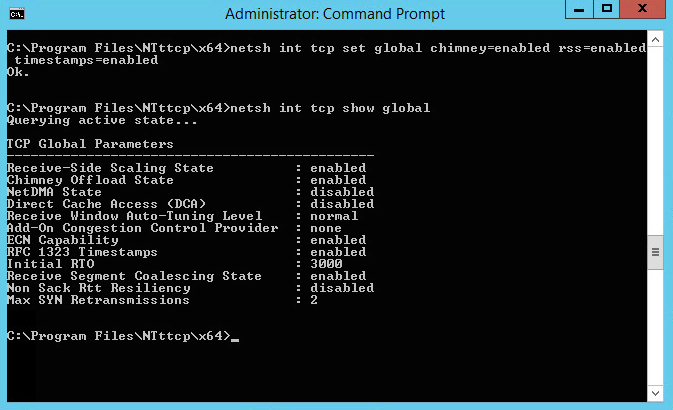

2012 R2 global netsh parameters (see http://support.microsoft.com/kb/951037): netsh int tcp set global chimney=enabled rss=enabled timestamps=enabled

NTttcp testing using 1 and 4 streams as follows: NTttcp.exe -r -wu 5 -cd 5 -m 1,*,192.168.10.10 -l 64K -t 60 -sb 1024K -rb 1024K
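As a rough sketch of how the 1-stream and 4-stream runs differ, the helper below rebuilds the command line above and changes only the first field of the -m mapping. The function name `ntttcp_args` is hypothetical, and the sender form (-s in place of -r) is my assumption based on how NTttcp is normally paired; only the receiver command appears in this post.

```python
from typing import List


def ntttcp_args(role: str, streams: int, receiver_ip: str) -> List[str]:
    """Build an NTttcp argument list mirroring the options used in this post.

    role is "-r" (receiver, as shown in the post) or "-s" (sender, assumed).
    """
    if role not in ("-r", "-s"):
        raise ValueError("role must be '-r' (receiver) or '-s' (sender)")
    if streams < 1:
        raise ValueError("streams must be at least 1")
    return [
        "NTttcp.exe", role,
        "-wu", "5", "-cd", "5",               # warm-up and cool-down, in seconds
        "-m", f"{streams},*,{receiver_ip}",   # streams, any CPU, receiver address
        "-l", "64K",                          # buffer length per send/receive
        "-t", "60",                           # test duration, in seconds
        "-sb", "1024K", "-rb", "1024K",       # send and receive socket buffers
    ]


if __name__ == "__main__":
    for n in (1, 4):
        print(" ".join(ntttcp_args("-r", n, "192.168.10.10")))
```

With `streams=1` this reproduces the receiver command shown above; with `streams=4` only the mapping changes, to `4,*,192.168.10.10`.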

At this point, my plan was to perform some initial testing, then tweak the Virtual Machine and Hypervisor settings based on the results, and finally validate maximum performance with an SR-IOV configuration that dedicates a NIC to the Virtual Machine. The results changed my plans.

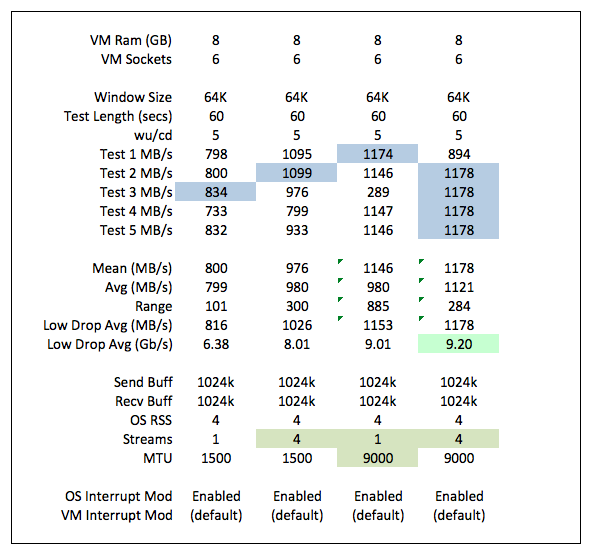

Here are my initial results:

Link to the Excel results file above.

My first test, using 1 stream and a 1500-byte MTU, achieved a very respectable 6.38 Gbps. Increasing either the number of streams or the MTU allowed 8+ Gbps to be achieved without any additional VM or Hypervisor configuration.
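To put those numbers in context, here is a back-of-the-envelope sketch of the TCP payload ceiling on a 10GbE link at each MTU. The assumptions are mine rather than measured: IPv4, no VLAN tag, standard Ethernet framing overhead, and the 12-byte TCP timestamp option (timestamps are enabled in the netsh settings above). The function name is hypothetical.

```python
# Per-frame bytes on the wire that sit outside the MTU (assumes no VLAN tag):
# 14 Ethernet header + 4 FCS + 8 preamble/SFD + 12 inter-frame gap.
ETH_OVERHEAD = 14 + 4 + 8 + 12
IP_TCP_HEADERS = 20 + 20        # IPv4 and TCP headers without options
TCP_TIMESTAMP_OPTION = 12       # 10-byte option padded to 12 bytes


def max_tcp_goodput_gbps(mtu: int, link_gbps: float = 10.0,
                         timestamps: bool = True) -> float:
    """Theoretical TCP payload rate for full-sized frames on the given link."""
    payload = mtu - IP_TCP_HEADERS - (TCP_TIMESTAMP_OPTION if timestamps else 0)
    on_wire = mtu + ETH_OVERHEAD
    return link_gbps * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ceiling of about {max_tcp_goodput_gbps(mtu):.2f} Gbps")
    # Fraction of the 1500 MTU ceiling reached by the single-stream result.
    print(f"1 stream, 1500 MTU: {6.38 / max_tcp_goodput_gbps(1500):.0%} of ceiling")
```

Under these assumptions the jumbo-frame ceiling is only a few percent higher than the 1500 MTU ceiling, so most of the benefit from a larger MTU likely comes from processing fewer packets per second rather than from reduced header overhead.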

Very anticlimactic, but the earlier VM to VM testing on a single Hypervisor had already identified an optimal test configuration, and those results were duplicated across a physical network between two Hypervisors.

One gotcha: my initial 9000 MTU tests were awful. A quick check found one of the Hypervisor standard switches configured for a 1500 MTU while the other was correctly set to 9000. After a quick change to the switch MTU, the test results were in line with my expectations.
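One way to catch this kind of mismatch before running a full test is a don't-fragment ping sized to the MTU, run from one VM to the other. The sketch below only builds the Windows ping command; it assumes IPv4 (20-byte IP header plus 8-byte ICMP header), and the function name is hypothetical. It is not something I ran as part of the tests above.

```python
from typing import List

ICMP_OVERHEAD = 20 + 8  # IPv4 header + ICMP echo header, in bytes


def df_ping_command(mtu: int, target: str) -> List[str]:
    """Return a Windows ping command that fills exactly one MTU-sized packet.

    -f sets the Don't Fragment flag and -l sets the payload size, so the ping
    should only succeed if every hop on the path accepts the full MTU.
    """
    payload = mtu - ICMP_OVERHEAD
    if payload <= 0:
        raise ValueError("MTU too small for an ICMP echo")
    return ["ping", "-f", "-l", str(payload), target]


if __name__ == "__main__":
    print(" ".join(df_ping_command(9000, "192.168.10.10")))
    print(" ".join(df_ping_command(1500, "192.168.10.10")))
```

If the 1500 MTU sized ping succeeds but the 9000 MTU sized one fails, something on the path (in my case a standard switch) is likely still set to the smaller MTU.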

Attached are screenshots showing physical NIC status from a Hypervisor perspective:

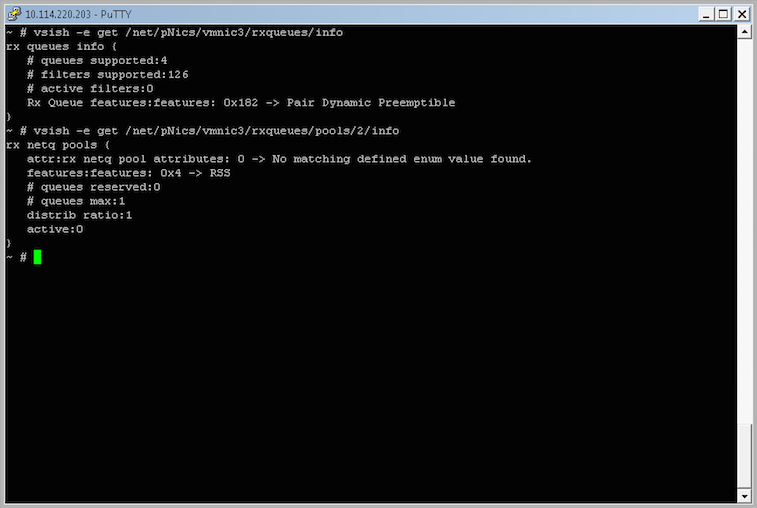

vsish -e commands to show vmnic3 parameters:

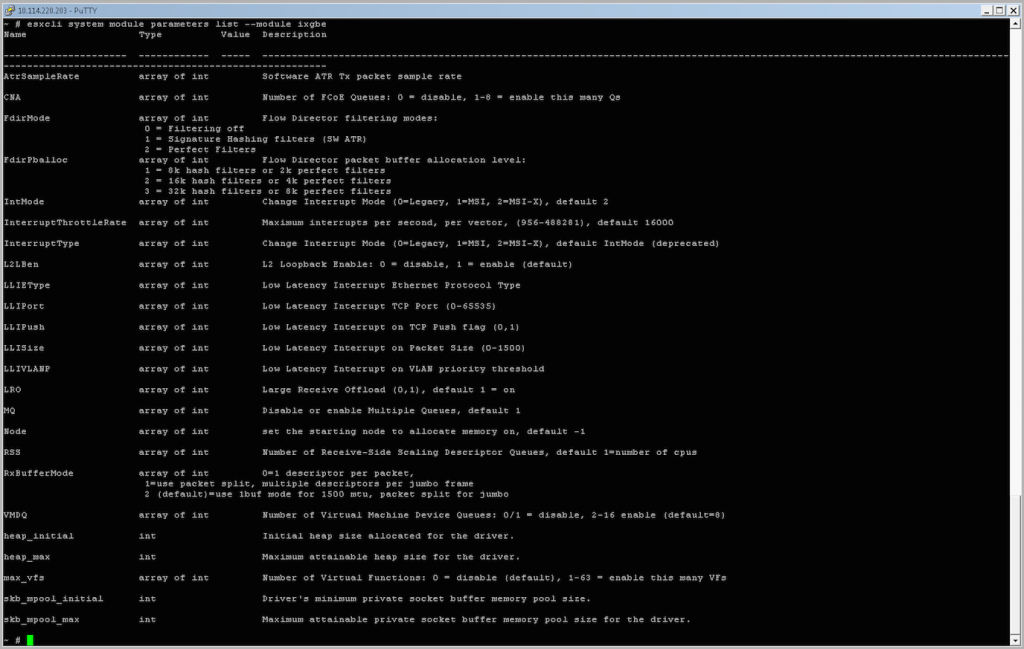

esxcli system module parameters list showing configuration of the 10GbE adapter:

I am certainly delighted with the test results achieved without any special Hypervisor NIC accommodations, and with the ability of VMs to easily drive these NICs close to wire speed. I do plan on running some SR-IOV tests in the future. All good fun 😉