Throughput Testing – VMs between Hypervisors with SR-IOV

Throughput testing over 10 GbE has been an interesting exercise. Virtual Machines using the VMXNET3 adapter achieve impressive throughput while maintaining complete mobility around a VMware Cluster (vMotion/HA/DRS).

The following series of tests validates throughput when using VMs configured to use SR-IOV…

First, some background on Single Root I/O Virtualization, or SR-IOV – Scott Lowe has written a nice introduction to SR-IOV, while Intel has provided a nice technology primer detailing why SR-IOV was created…

Using SR-IOV, a PCIe device such as a NIC can appear to be multiple separate physical PCIe devices. This allows a “piece” of the PCIe device to be dedicated to a Virtual Machine. When configuring SR-IOV, you select how many Virtual Functions (VFs), or devices, you want to create. The benefit of SR-IOV is direct access to the hardware for improved performance.

Now the bad news. By configuring SR-IOV, you lock the VM to the hardware and thus lose Virtual Machine mobility capabilities such as HA and DRS. There are still benefits to configuring Virtual Machines with SR-IOV: high-performance access to hardware while still supporting hardware virtualization. The Hypervisor will support a mixture of SR-IOV enabled Virtual Machines and non-SR-IOV Virtual Machines.

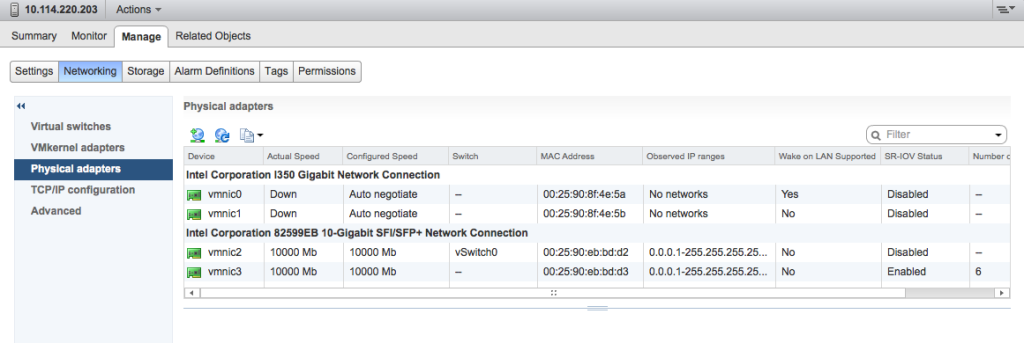

Configuring SR-IOV is documented in the vSphere 5.5 Documentation, which covers both Hypervisor and Virtual Machine configuration. The attached screenshot shows my VMNIC3 with SR-IOV enabled and 6 Virtual Functions configured:

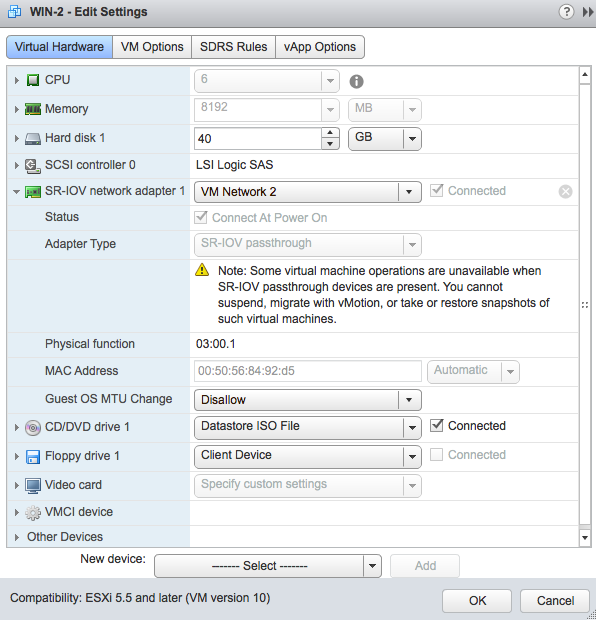

To configure SR-IOV on a Virtual Machine:

- Virtual Machine configuration requires an update to Hardware Version 10.

- All Virtual Machine RAM needs to be reserved.

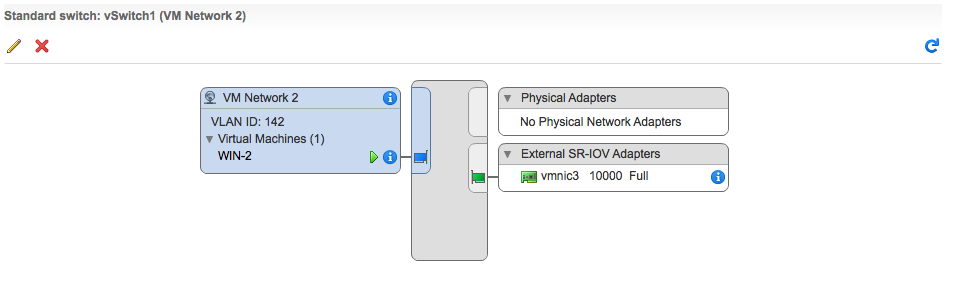

- There should be a Virtual Switch Port Group configured with an MTU and VLAN that match your VM’s network requirements.

- Any existing network adapter is deleted, and a new network adapter is added with the adapter type SR-IOV Passthrough. Note the port group specified and the VM restrictions:

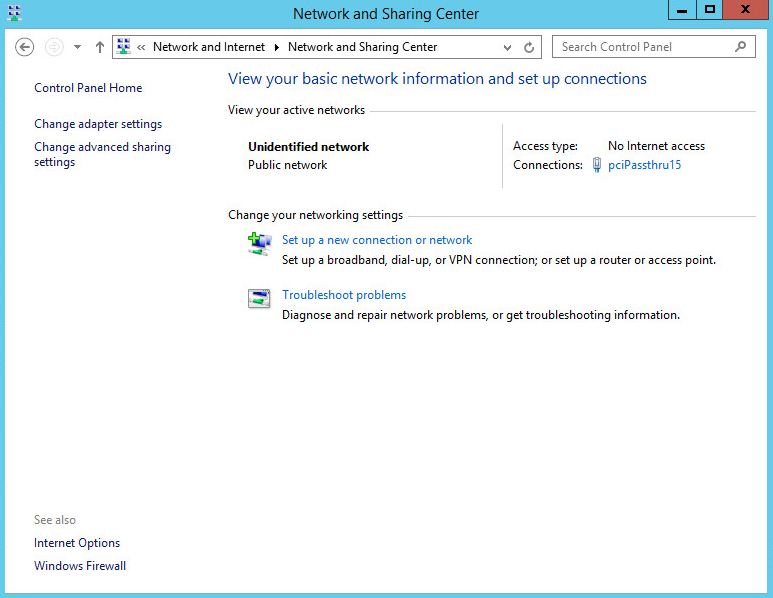
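The Virtual Machine prerequisites listed above can be sketched as a small pre-flight check. This is only an illustration: `VmConfig` and `sriov_blockers` are hypothetical names, not part of any VMware API, and treating "Hardware Version 10 or later" as acceptable is an assumption. The values would have to be filled in by hand or from whatever inventory tooling you use.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VmConfig:
    """Hypothetical summary of the VM settings that matter for SR-IOV."""
    hardware_version: int
    memory_mb: int
    memory_reservation_mb: int
    adapter_types: List[str] = field(default_factory=list)


def sriov_blockers(vm: VmConfig) -> List[str]:
    """Return the checklist items from the post that the VM does not yet meet."""
    problems = []
    # Assumption: version 10 or anything later is acceptable.
    if vm.hardware_version < 10:
        problems.append("update to Hardware Version 10")
    # All Virtual Machine RAM needs to be reserved.
    if vm.memory_reservation_mb < vm.memory_mb:
        problems.append("reserve all Virtual Machine RAM")
    # The network adapter must be of type SR-IOV Passthrough.
    if "SR-IOV Passthrough" not in vm.adapter_types:
        problems.append("add an SR-IOV Passthrough network adapter")
    return problems


if __name__ == "__main__":
    vm = VmConfig(hardware_version=9, memory_mb=8192,
                  memory_reservation_mb=4096, adapter_types=["VMXNET3"])
    for item in sriov_blockers(vm):
        print("TODO:", item)
```

An empty list means the VM meets the three Virtual Machine-side items; the Port Group MTU and VLAN settings still need to be checked separately.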

Once configured and the VM started, the following shows what the NIC looks like to Windows:

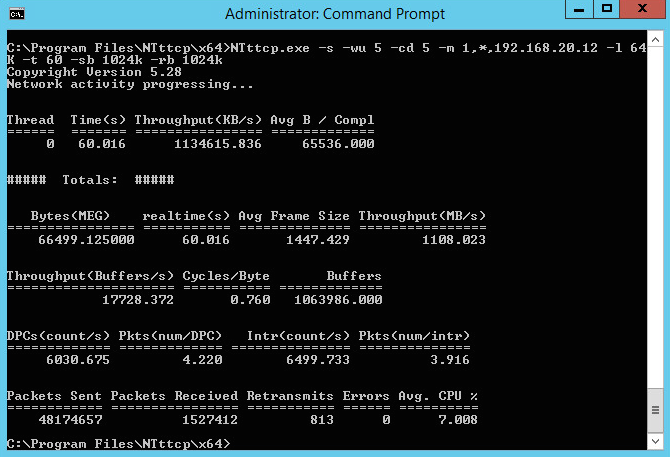

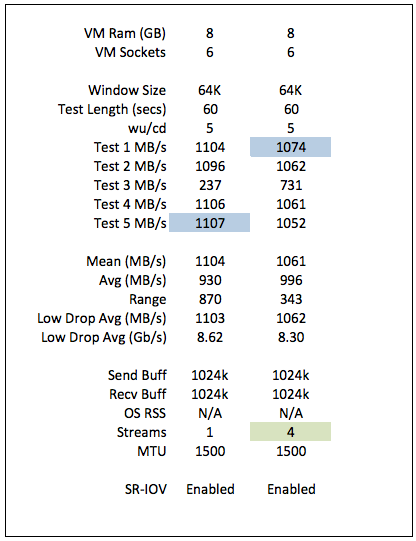

Now for testing… Very brief and very impressive: close to wire speed was achieved using only a single stream with a 64K buffer length and a 1500 byte MTU. Increasing to 4 streams made no real difference in throughput, which is to be expected.
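For context on what "wire speed" means at a 1500 byte MTU, here is a rough back-of-the-envelope calculation of the best-case TCP payload rate on 10 GbE. It assumes untagged Ethernet frames, IPv4, and no TCP options; the function name is just for illustration.

```python
def tcp_goodput_bps(link_bps: float, mtu: int,
                    l2_overhead: int = 38, l3l4_headers: int = 40) -> float:
    """Best-case TCP payload rate for full-sized frames.

    l2_overhead: bytes on the wire per frame outside the MTU
                 (preamble + SFD 8, Ethernet header 14, FCS 4, inter-frame gap 12).
    l3l4_headers: IPv4 header (20) + TCP header without options (20).
    """
    payload_bytes = mtu - l3l4_headers   # TCP payload carried per frame
    wire_bytes = mtu + l2_overhead       # bytes the frame occupies on the wire
    return link_bps * payload_bytes / wire_bytes


if __name__ == "__main__":
    gbps = tcp_goodput_bps(10e9, 1500) / 1e9
    print(f"Best-case TCP goodput at MTU 1500: {gbps:.2f} Gbps")
```

Under these assumptions the ceiling works out to roughly 9.49 Gbps, so a result in the low-to-mid 9 Gbps range is effectively as fast as a 1500 byte MTU allows.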

Tests used the following NTttcp configuration:

Client: NTttcp.exe -s -wu 5 -cd 5 -m 1,*,192.168.20.12 -l 64k -t 60 -sb 1024k -rb 1024k

Server: NTttcp.exe -r -wu 5 -cd 5 -m 1,*,192.168.20.12 -l 64k -t 60 -sb 1024k -rb 1024k
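If you want to repeat the runs with different stream counts or buffer sizes, the two command lines can be generated from one set of parameters. A minimal sketch: the helper name `ntttcp_args` is my own, and it only uses the flags shown above. Note that both sides are given the receiver's IP address, and the first field of `-m` is the number of threads (streams).

```python
from typing import List, Optional


def ntttcp_args(role: str, receiver_ip: str, threads: int = 1,
                buffer_len: str = "64k", seconds: int = 60,
                warmup: int = 5, cooldown: int = 5,
                socket_buffer: Optional[str] = None) -> List[str]:
    """Build an NTttcp argument list for the sender ("-s") or receiver ("-r")."""
    if role not in ("sender", "receiver"):
        raise ValueError("role must be 'sender' or 'receiver'")
    args = [
        "NTttcp.exe",
        "-s" if role == "sender" else "-r",
        "-wu", str(warmup),                   # warm-up seconds
        "-cd", str(cooldown),                 # cool-down seconds
        "-m", f"{threads},*,{receiver_ip}",   # threads, any CPU, receiver IP
        "-l", buffer_len,                     # buffer length
        "-t", str(seconds),                   # test duration in seconds
    ]
    if socket_buffer is not None:
        # Same send and receive socket buffer size, as in the tuned runs.
        args += ["-sb", socket_buffer, "-rb", socket_buffer]
    return args


if __name__ == "__main__":
    for role in ("sender", "receiver"):
        print(" ".join(ntttcp_args(role, "192.168.20.12", socket_buffer="1024k")))
```

Passing `threads=4` produces the 4-stream variant, and leaving `socket_buffer` unset omits `-sb`/`-rb` so the defaults are used.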

In summary, SR-IOV gives Virtual Machines direct, high-performance access to hardware, at the cost of some Virtual Machine mobility. Happy to say I need to start looking for 40 GbE servers to see where the next bottleneck resides 😉

Update:

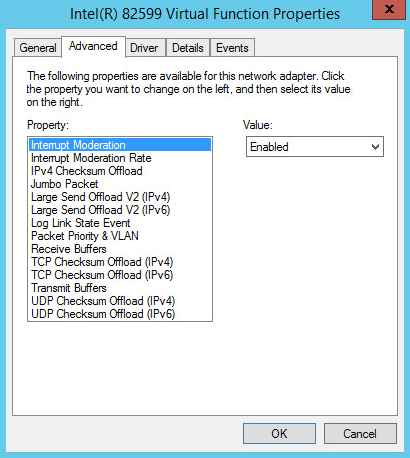

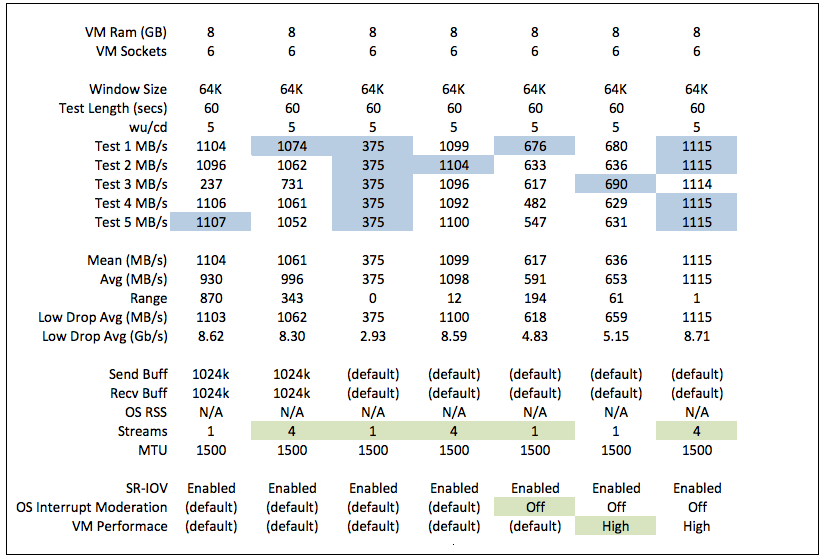

I was asked to run some more tests with default buffer parameters… No problem, but the caution I would provide is that applications do not write single streams, and various applications do provide TCP/IP buffer tuning to account for high-performance NICs. Testing with default buffers shows a single stream is capable of around 5 Gbps with some additional OS and VM optimizations: disabling OS Interrupt Moderation on the passthrough NIC, and changing the VM Latency Sensitivity to High.

Default tests used the following NTttcp configuration:

Client: NTttcp.exe -s -wu 5 -cd 5 -m 1,*,192.168.20.12 -l 64k -t 60

Server: NTttcp.exe -r -wu 5 -cd 5 -m 1,*,192.168.20.12 -l 64k -t 60
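One general way to reason about why buffer sizes matter for a single stream: TCP can keep at most one window of data in flight per round trip, so throughput is bounded by window size divided by round-trip time. The sketch below just does that arithmetic. The window sizes and the 0.2 ms round-trip time are illustrative assumptions, not measurements from these tests, and this is not a claim about what limited the default-buffer runs.

```python
def window_limited_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-stream throughput: one window per round trip."""
    return window_bytes * 8 / rtt_seconds


if __name__ == "__main__":
    rtt = 0.0002  # hypothetical 0.2 ms round trip between the two VMs
    for label, window in (("64 KiB", 64 * 1024), ("1 MiB", 1024 * 1024)):
        gbps = window_limited_bps(window, rtt) / 1e9
        print(f"{label} window at {rtt * 1000:.1f} ms RTT: up to {gbps:.1f} Gbps")
```

With these made-up numbers the smaller window caps out well below 10 Gbps while the larger one does not, which is the general reason larger socket buffers help a single stream fill a fast link.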