NSX and Virtual Machine Sprawl

During a recent customer discussion on NSX, I was asked about Virtual Machine sprawl in an NSX environment. It was a great question, and it cuts to the heart of Network Virtualization and how network functions are implemented in a virtualized environment.

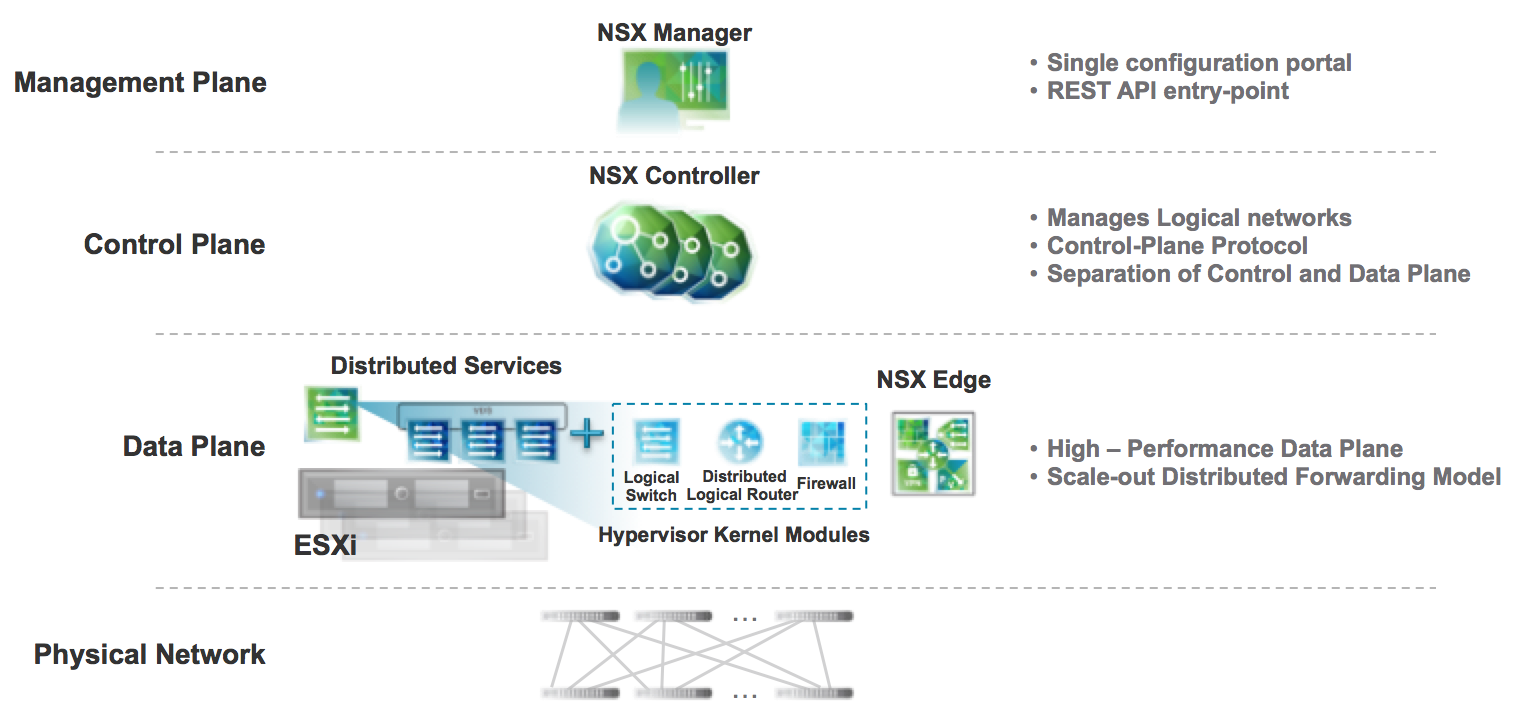

Management Plane / Control Plane / Data Plane

Traditional hardware-based networks require compute capability in various forms, including redundant supervisor cards, line cards, and their various combinations of daughter boards. Certainly my experiences with Cisco 6500s and their MSFCs, PFCs, DFCs, and associated compatibility issues kept me very busy (see https://supportforums.cisco.com/document/85621/understanding-msfc-pfc-and-dfc-roles-catalyst-6500-series-switch).

In the virtualized world, compute capability is implemented as physical x86 workloads, virtual x86 workloads (user-space VMs), or kernel modules within the hypervisor (kernel space).

So how much virtual machine “sprawl” exists in an NSX environment? Compared to a physical environment with supervisor cards, line cards, daughter boards, and associated compatibility matrices, I would say very little.

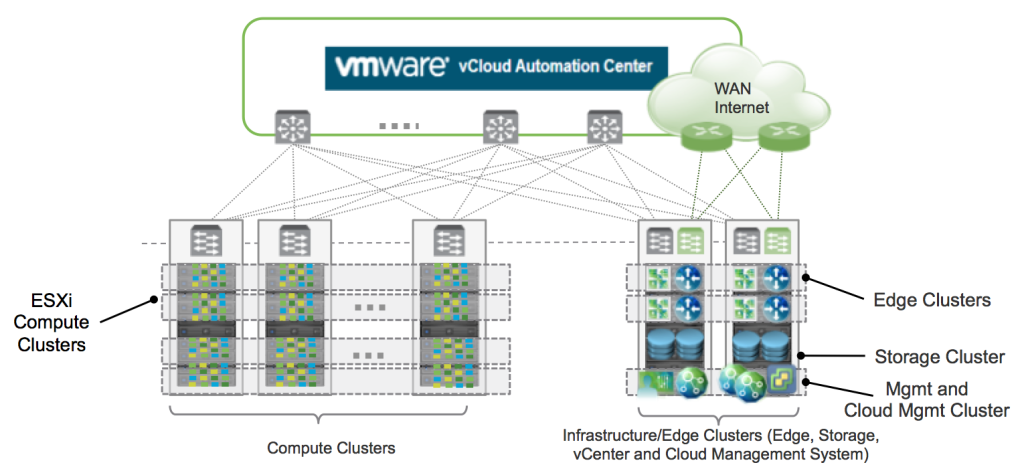

An NSX deployment includes a Management Plane (1 VM for NSX Manager), a Control Plane (3 VMs for Controllers), and a Data Plane (kernel modules in each hypervisor). That is a total of 4 VMs so far, and these VMs would be deployed in a shared management cluster alongside the other VMs used to manage a vSphere environment.

The data plane also requires VMs for North/South Edge services (2+ VMs) and control VMs for each Logical Distributed Router (1-2 VMs per LDR). Given that a Logical Distributed Router supports up to 1,000 interfaces, 10 LDRs, and therefore only 20 control VMs (2 VMs x 10 LDRs), would be required to create 10,000 logical networks.

Enterprise Network VM “Sprawl”

An Enterprise network supporting 1,000 logical switches and 20 Gbps of North/South bandwidth would require a grand total of 8 VMs to support NSX Management, Control, and Data Plane functions: 4 for management and control, 2 Edge VMs, and 2 LDR control VMs.

Scaling an Enterprise network up to the maximum of 10,000 logical switches, combined with 80 Gbps of North/South bandwidth, would require a total of 32 VMs to support NSX Management, Control, and Data Plane functions: 4 for management and control, 8 Edge VMs, and 20 LDR control VMs.
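
The sizing arithmetic in this section can be sketched in a few lines of Python. This is a rough illustration, not an official sizing tool: the function name `nsx_vm_count` and its parameters are hypothetical, the 10 Gbps per Edge VM figure is an assumption inferred from the 2 Edge VMs quoted for 20 Gbps, and it assumes each logical switch consumes one LDR interface.

```python
import math

MANAGER_VMS = 1            # NSX Manager (management plane)
CONTROLLER_VMS = 3         # NSX Controllers (control plane)
INTERFACES_PER_LDR = 1000  # per-LDR interface limit quoted in this post
CONTROL_VMS_PER_LDR = 2    # assumption: LDR control VMs deployed as a pair
GBPS_PER_EDGE_VM = 10      # assumption: inferred from 2 Edge VMs for 20 Gbps


def nsx_vm_count(logical_switches, north_south_gbps):
    """Estimate NSX VMs, assuming one LDR interface per logical switch."""
    ldrs = math.ceil(logical_switches / INTERFACES_PER_LDR)
    # Never fewer than 2 Edge VMs, matching the "2+ VMs" minimum above.
    edge_vms = max(2, math.ceil(north_south_gbps / GBPS_PER_EDGE_VM))
    return MANAGER_VMS + CONTROLLER_VMS + edge_vms + ldrs * CONTROL_VMS_PER_LDR


print(nsx_vm_count(1000, 20))  # 8
```

Changing the constants at the top is the quickest way to test different assumptions about Edge throughput or LDR redundancy.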

NSX Management, Control, and Data Plane VMs are normally spread across multiple hypervisors in a management cluster for redundancy, but a management cluster in an Enterprise network could consist of only 3-4 1/2U blades.

Service Provider Network “Sprawl”

The only difference between Enterprise networks and Service Provider networks is the amount of tenant isolation that may be required. For every tenant, the service provider may decide to provide dedicated North/South Edge Services (2 VMs) and/or a dedicated Logical Distributed Router (2 VMs).

This would mean 4 VMs for the management and control plane, and 4 VMs for each tenant, assuming 20 Gbps of North/South bandwidth and a dedicated Logical Distributed Router. Service Providers may also choose to provide tenants with LDR isolation only, reducing the number of VMs required per tenant to 2.
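
The per-tenant arithmetic can be sketched the same way. The function name `provider_vm_count` and the tenant count of 50 are hypothetical, chosen only for illustration. With LDR-only isolation the sketch counts just the 2 LDR VMs per tenant quoted above and does not count any shared Edge VMs.

```python
SHARED_VMS = 4           # 1 NSX Manager + 3 Controllers, shared by all tenants
EDGE_VMS_PER_TENANT = 2  # dedicated North/South Edge services (20 Gbps)
LDR_VMS_PER_TENANT = 2   # dedicated Logical Distributed Router control VMs


def provider_vm_count(tenants, dedicated_edges=True):
    """Estimate NSX VMs for a provider with the given number of tenants."""
    per_tenant = LDR_VMS_PER_TENANT
    if dedicated_edges:
        per_tenant += EDGE_VMS_PER_TENANT
    # Shared Edge VMs are not counted when dedicated_edges is False.
    return SHARED_VMS + tenants * per_tenant


print(provider_vm_count(50))                         # 204
print(provider_vm_count(50, dedicated_edges=False))  # 104
```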

Network Function Virtualization

NSX supports Network Function Virtualization, and features like DHCP, load balancing, SSL VPN, and L2 VPN can now be deployed as part of an application orchestration workflow. For any application blueprint, additional NSX VMs may be added to the blueprint to support application network functions. This would likely be no more than 1-2 VMs per application.

So in summary, yes, there are VMs and kernel modules associated with NSX, but the number of VMs is finite and predictable: a fixed set for the Management and Control planes, a set for the Data plane that grows with bandwidth and logical network count, and a small number for the network functions virtualized as part of application blueprints.